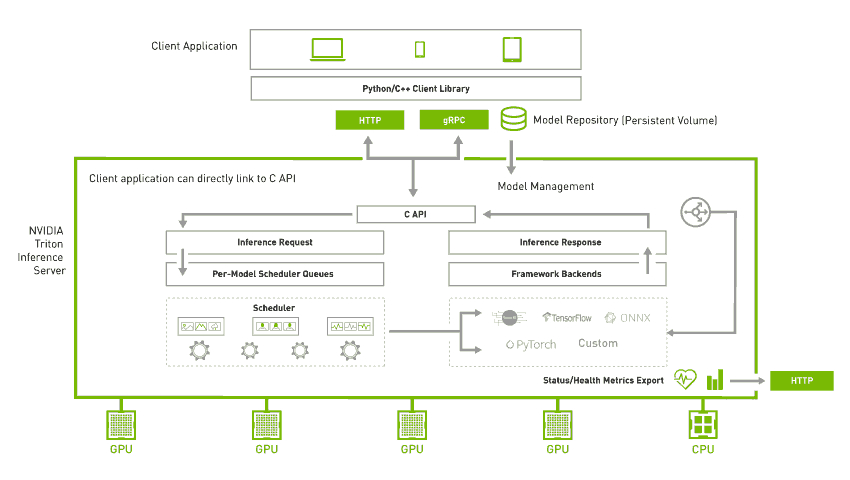

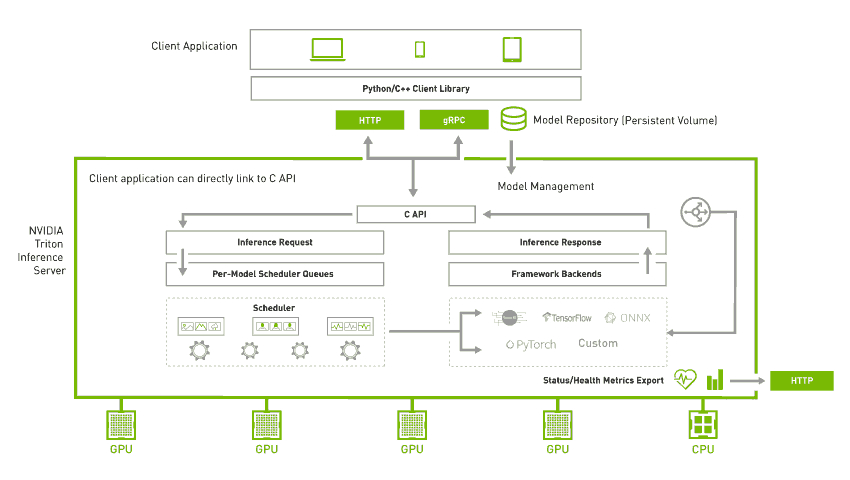

NVIDIA 的 Triton Inference Server.

把模型文件 (支持主流格式) 放在 model repo, 由 inference server 调度, 根据模型配置的推理引擎 (ONNX, TensorRT 等) 输出结果.

下面以在 CPU 上部署 ONNX 模型为例.

<!-- more -->最简单的方法是在 NVIDIA GPU Cloud (NGC) 拉取预编译的镜像.

$ docker pull nvcr.io/nvidia/tritonserver:<xx.yy>-py3

# docker pull nvcr.io/nvidia/tritonserver:22.08-py3

其中 <xx.yy> 是 Triton 版本号, 每个月末都会发新版本, 比如 2022 年 8 月末最新的版本号就是 22.08.

模型仓库存储模型文件和模型配置, 参考 model_repository 官方文档.

用 --model-repository 参数指定要使用的模型仓库路径; 这个参数可以设置多次以导入多个模型仓库.

(tritonserver 是编译出来的二进制文件, 要在 docker 里)

$ tritonserver --model-repository=<model-repository-path>

模型仓库必须符合如下结构.

<model-repository-path>/

<model-name>/

[config.pbtxt]

[<output-labels-file> ...]

<version>/

<model-definition-file>

<version>/

<model-definition-file>

...

<model-name>/

# 模型配置

config.pbtxt

# 版本以数字命名, 数字大的为新版本

# The subdirectories that are not numerically named,

# or have names that start with zero (0) will be ignored

1/

# 默认模型文件名 model.onnx

# 可在 config.pbtxt 中的 default_model_filename 修改

model.onnx

2/

model.onnx

...

模型配置 config.pbtxt 参考 model_configuration 官方文档. 特别地, Triton 可以读取 ONNX 模型里的配置 自动生成配置, 因而可以不显式提供这个文件.

A minimal model configuration must specify the platform and/or backend properties, the max_batch_size property, and the input and output tensors of the model. (好像不能写注释, 不然 Triton 报错.)

// platform: "onnxruntime_onnx" // 等价写法

backend: "onnxruntime"

max_batch_size: 20

// default_model_filename: "model.onnx"

input [

{

name: "input_ids"

data_type: TYPE_INT64

dims: [512]

},

{

name: "attention_mask"

data_type: TYPE_INT64

dims: [512]

}

]

output [

{

name: "logits"

data_type: TYPE_FP32

dims: [6]

}

]

instance_group [

{

count: 3

kind: KIND_CPU

}

]

dynamic_batching {

preferred_batch_size: [8, 16]

max_queue_delay_microseconds: 100

}

参考 backend 官方文档. A Triton backend is the implementation that executes a model. 这个 backend 也可以自己编译提供.

For using TensorRT backend, the value of this setting should be tensorrt. Similarly, for using PyTorch, ONNX and TensorFlow Backends, the backend field should be set to pytorch, onnxruntime or tensorflow respectively. For all other backends, 'backend' must be set to the name of the backend.

If the model's batch dimension is the first dimension, and all inputs and outputs to the model have this batch dimension, then Triton can use its dynamic batcher or sequence batcher to automatically use batching with the model. In this case max_batch_size should be set to a value greater-or-equal-to 1.

不支持 batching, 或者不支持上述 batching 方式时, max_batch_size 设置为 0.

Datatypes 参考 这里.

For models with max_batch_size greater-than 0, the full shape is formed as [-1] + dims. For models with max_batch_size equal to 0, the full shape is formed as dims. (-1 表示可变长维度.)

输入输出和配置文件不同时可以添加 reshape 参数.

每个模型可以有多个版本共存. Triton 提供三种版本策略, 默认 latest 1.

// 所有版本同时服务

version_policy: { all: {}}

// 只使用最近 n 个版本

version_policy: { latest: { num_versions: 2}}

// 只使用指定的版本

version_policy: { specific: { versions: [1, 3]}}

Triton can provide multiple instances of a model so that multiple inference requests for that model can be handled simultaneously. By default, a single execution instance of the model is created for each GPU available in the system.

For example, the following configuration will place one execution instance on GPU 0, two execution instances on GPUs 1 and 2, and two execution instances on the CPU.

instance_group [

{

count: 1

kind: KIND_GPU

gpus: [ 0 ]

},

{

count: 2

kind: KIND_GPU

gpus: [ 1, 2 ]

},

{

count: 2

kind: KIND_CPU

}

]

If no count is specified for a KIND_CPU instance group, then the default instance count will be 2 for selected backends (Tensorflow and Onnxruntime). All other backends will default to 1.

对于有状态的模型, 可以用 sequence batcher, 以及 ensemble batcher, 此处从略.

Dynamic batching is a feature of Triton that allows inference requests to be combined by the server, so that a batch is created dynamically. Creating a batch of requests typically results in increased throughput. The dynamic batcher should be used for stateless models. The dynamically created batches are distributed to all model instances configured for the model.

dynamic_batching {

preferred_batch_size: [4, 8] // 4 and 8

max_queue_delay_microseconds: 100

}

请求进来后形成队列, 拼成 preferred_batch_size 传给模型推理. 如果不能拼成 preferred_batch_size, 就等待至多 max_queue_delay_microseconds 毫秒. 因此这两个参数需要 trade-off, 调优方法见 原文档.

Triton is optimized to provide the best inferencing performance by using GPUs, but it can also work on CPU-only systems. In both cases you can use the same Triton Docker image.

$ docker run --rm -p8000:8000 -p8001:8001 -p8002:8002 \

-v/full/path/to/docs/examples/model_repository:/models \

nvcr.io/nvidia/tritonserver:<xx.yy>-py3 \

tritonserver --model-repository=/models

$ pip install tritonclient[all]

参考 官方 readme, 和 Python 示例 simple_grpc_shm_client.py. 仿照这个样例写了一个 更通用的脚本.

性能监控

Further reading

<details><summary><b>网友 "楷哥" 写的一系列博文</b><font color="deepskyblue"> (Show more »)</font></summary> <ul> <li>2021-10-09 <a href="https://www.cnblogs.com/zzk0/p/15384034.html">Serving System 调研</a></li> <li>2021-10-30 <a href="https://www.cnblogs.com/zzk0/p/15487465.html">我不会用 Triton 系列:Triton Inference Server 简介</a></li> <li>2021-11-01 <a href="https://www.cnblogs.com/zzk0/p/15496171.html">我不会用 Triton 系列:如何实现一个 backend</a></li> <li>2021-11-04 <a href="https://www.cnblogs.com/zzk0/p/15510825.html">我不会用 Triton 系列:Stateful Model 学习笔记</a></li> <li>2021-11-06 <a href="https://www.cnblogs.com/zzk0/p/15517120.html">我不会用 Triton 系列:Triton 搭建 ensemble 过程记录</a></li> <li>2021-11-10 <a href="https://www.cnblogs.com/zzk0/p/15535828.html">我不会用 Triton 系列:Python Backend 的使用</a></li> <li>2021-11-11 <a href="https://www.cnblogs.com/zzk0/p/15540333.html">我不会用 Triton 系列:构建 Triton Server 过程记录</a></li> <li>2021-11-11 <a href="https://www.cnblogs.com/zzk0/p/15542015.html">我不会用 Triton 系列:Rate Limiter 的使用</a></li> <li>2021-11-11 <a href="https://www.cnblogs.com/zzk0/p/15538894.html">我不会用 Triton 系列:Model Warmup 的使用</a></li> <li>2021-11-14 <a href="https://www.cnblogs.com/zzk0/p/15553394.html">我不会用 Triton 系列:Agent 的使用</a></li> <li>2021-11-18 <a href="https://www.cnblogs.com/zzk0/p/15543824.html">我不会用 Triton 系列:上手指北</a> (推荐)</li> <li>2022-02-24 <a href="https://www.cnblogs.com/zzk0/p/15932542.html">我不会用 Triton 系列:命令行参数简要介绍</a></li> <li>2022-04-11 <a href="https://www.cnblogs.com/zzk0/p/16125239.html">kserve predict api v2</a></li> </ul></details>